Abstract

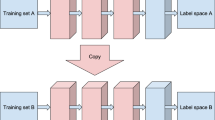

As reported in the literature, training an ensemble of networks is an effective way to improve performance with respect to a single network. However, there are several methods for constructing the ensemble. In this paper we present new results from a comparison of twenty different methods. We trained ensembles of 3, 9, 20 and 40 networks to cover a wide range of ensemble sizes. The results show that the improvement in performance beyond 9 networks depends on the method but is usually small. For an ensemble of 3 networks, the best method is “Decorrelated”, which adds a penalty term to the usual Backpropagation error function to decorrelate the outputs of the networks in the ensemble. For 9 and 20 networks the best method is Conservative Boosting, and for 40 networks the best method is CELS.
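The abstract describes the “Decorrelated” method only as a penalty term added to the usual Backpropagation error. The sketch below assumes a Rosen-style penalty in which member j is trained on E_j = ½(t − f_j)² + λ(t − f_{j−1})(t − f_j), with the previous member j − 1 frozen. The exact form of the penalty, the value of λ, and the helper names (`decorrelated_loss`, `decorrelated_grad`, `train_member`, `ensemble_predict`) are hypothetical, and one-input linear models stand in for multilayer feedforward networks to keep the example short.

```python
"""Minimal sketch of a decorrelation penalty for ensemble training.

Assumptions (not taken from the paper): a Rosen-style penalty coupling each
member only to the previous one, a hand-picked lambda, and one-input linear
models standing in for multilayer feedforward networks.
"""
from typing import List, Optional, Sequence, Tuple


def decorrelated_loss(target: float, outputs: Sequence[float], j: int, lam: float) -> float:
    """Hypothetical helper: error of member j on one sample.

    E_j = 0.5 * (t - f_j)**2 + lam * (t - f_{j-1}) * (t - f_j) for j > 0,
    i.e. the usual squared error plus a penalty on the product of the errors
    of member j and the previous member j - 1.
    """
    err_j = target - outputs[j]
    loss = 0.5 * err_j ** 2
    if j > 0:
        loss += lam * (target - outputs[j - 1]) * err_j
    return loss


def decorrelated_grad(target: float, outputs: Sequence[float], j: int, lam: float) -> float:
    """Hypothetical helper: dE_j / df_j, the error signal Backpropagation would use."""
    grad = -(target - outputs[j])
    if j > 0:
        grad -= lam * (target - outputs[j - 1])
    return grad


def train_member(
    data: Sequence[Tuple[float, float]],
    prev_outputs: Sequence[Optional[float]],
    lam: float,
    lr: float = 0.05,
    epochs: int = 500,
) -> Tuple[float, float]:
    """Fit f(x) = w * x + b by per-sample gradient descent on E_j.

    prev_outputs[k] is the frozen previous member's output on data[k],
    or None when training the first member (plain squared error).
    """
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for (x, t), prev in zip(data, prev_outputs):
            f = w * x + b
            outputs: List[float] = [f] if prev is None else [prev, f]
            g = decorrelated_grad(t, outputs, len(outputs) - 1, lam)
            # Chain rule: df/dw = x, df/db = 1.
            w -= lr * g * x
            b -= lr * g
    return w, b


def ensemble_predict(members: Sequence[Tuple[float, float]], x: float) -> float:
    """Combine members by simple output averaging."""
    return sum(w * x + b for w, b in members) / len(members)


if __name__ == "__main__":
    # Toy data t = x**2, which a linear model cannot fit exactly.
    data = [(0.0, 0.0), (1.0, 1.0), (2.0, 4.0)]
    members: List[Tuple[float, float]] = []
    prev: List[Optional[float]] = [None] * len(data)
    for _ in range(3):
        w, b = train_member(data, prev, lam=0.5)
        members.append((w, b))
        prev = [w * x + b for x, _ in data]
    print(members, ensemble_predict(members, 1.5))
```

For a single training sample, setting the gradient to zero gives t − f_j = −λ(t − f_{j−1}), so the penalty pushes each new member's error toward the opposite sign of its predecessor's; averaging such members lets their errors partially cancel.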

This research was supported by the project MAPACI TIC2002-02273 of CICYT in Spain.

References

Tumer, K., Ghosh, J.: Error correlation and error reduction in ensemble classifiers. Connection Science 8(3&4), 385–404 (1996)

Raviv, Y., Intrator, N.: Bootstrapping with Noise: An Effective Regularization Technique. Connection Science 8(3&4), 355–372 (1996)

Drucker, H., Cortes, C., Jackel, D., et al.: Boosting and Other Ensemble Methods. Neural Computation 6, 1289–1301 (1994)

Fernández-Redondo, M., Hernández-Espinosa, C., Torres-Sospedra, J.: Classification by multilayer feedforward ensembles. In: Yin, F.-L., Wang, J., Guo, C. (eds.) ISNN 2004. LNCS, vol. 3173, pp. 852–857. Springer, Heidelberg (2004)

Verikas, A., Lipnickas, A., Malmqvist, K., Bacauskiene, M., Gelzinis, A.: Soft Combination of neural classifiers: A comparative study. Pattern Recognition Letters 20, 429–444 (1999)

Oza, N.C.: Boosting with Averaged Weight Vectors. In: Windeatt, T., Roli, F. (eds.) MCS 2003. LNCS, vol. 2709, pp. 15–24. Springer, Heidelberg (2003)

Kuncheva, L.I.: Error Bounds for Aggressive and Conservative Adaboost. In: Windeatt, T., Roli, F. (eds.) MCS 2003. LNCS, vol. 2709, pp. 25–34. Springer, Heidelberg (2003)

Breiman, L.: Arcing Classifiers. Annals of Statistics 26(3), 801–849 (1998)

Liu, Y., Yao, X., Higuchi, T.: Evolutionary Ensembles with Negative Correlation Learning. IEEE Trans. on Evolutionary Computation 4(4), 380–387 (2000)

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Torres-Sospedra, J., Hernández-Espinosa, C., Fernández-Redondo, M. (2005). Ensembles of Multilayer Feedforward: Some New Results. In: Cabestany, J., Prieto, A., Sandoval, F. (eds) Computational Intelligence and Bioinspired Systems. IWANN 2005. Lecture Notes in Computer Science, vol 3512. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11494669_74

DOI: https://doi.org/10.1007/11494669_74

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-26208-4

Online ISBN: 978-3-540-32106-4

eBook Packages: Computer Science, Computer Science (R0)